Automate Your Kubernetes Cluster Setup Using Ansible: A Comprehensive Tutorial

- Rafael Natali

- Aug 22, 2023

- 4 min read

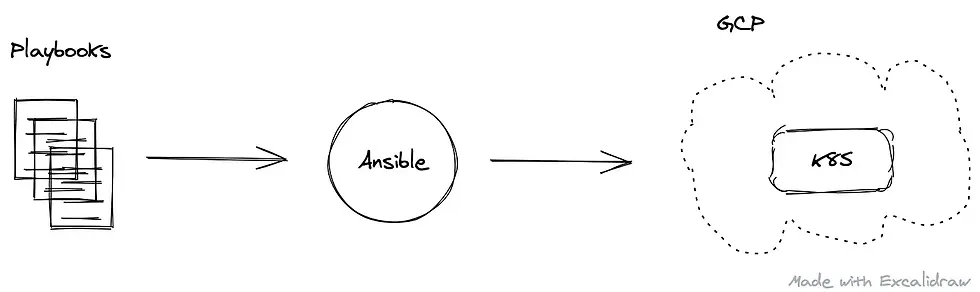

Using Ansible to install, set up, and configure a Google Kubernetes Engine (GKE) cluster on Google Cloud Platform (GCP).

Automating the setup of a GKE cluster

As I briefly described in this article, Infrastructure as Code (IaC)¹ is paramount to maintaining consistency across different environments. IaC resolves environment drift, where each environment ends up with unique configurations that cannot be reproduced automatically.

The code used to create this tutorial is available in this repository.

Ansible² is the tool of choice for this tutorial. It lets us write the code needed to automate the provisioning of a basic Kubernetes cluster on GCP (GKE)³.
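The modules used later in this tutorial come from the google.cloud collection, which is installed separately from Ansible itself. Below is a minimal sketch of a requirements file, assuming you manage collections with ansible-galaxy. The file name requirements.yml is an assumption for illustration and does not appear elsewhere in this tutorial:

```yaml
# requirements.yml (hypothetical file name, used here for illustration only)
---
collections:
  # collection that provides the gcp_compute_network, gcp_container_cluster,
  # and gcp_container_node_pool modules used in this tutorial
  - name: google.cloud
```

It could then be installed with ansible-galaxy collection install -r requirements.yml. The collection's documentation also lists Python dependencies, such as requests and google-auth, that need to be present on the machine running the playbook.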

Ansible Directory Layout

The following is the directory structure we will be working with throughout this tutorial.

    .
    └── ansible                     # top-level folder
        ├── ansible.cfg             # config file
        ├── create-k8s.yml          # playbook to provision env
        ├── destroy-k8s.yml         # playbook to destroy env
        ├── inventory
        │   └── gcp.yml             # inventory file
        └── roles
            ├── destroy_k8s         # role to remove k8s cluster
            │   └── tasks
            │       └── main.yml
            ├── destroy_network     # role to remove VPC
            │   └── tasks
            │       └── main.yml
            ├── k8s                 # role to create k8s cluster
            │   └── tasks
            │       └── main.yml
            └── network             # role to create VPC
                └── tasks
                    └── main.yml

Ansible Inventory

To begin with, create a YAML file in the ansible/inventory folder. This Ansible inventory⁴ file defines the variables that will be available to our code.

Here is a sample file:

    all:
      vars:
        # use this section to enter GCP related information
        zone: europe-west2-c
        region: europe-west2
        project_id: <gcp-project-id>
        # path to the service account key, relative to your home directory
        # (credentials_file below already prepends $HOME, so no leading "~/")
        gcloud_sa_path: "gcp-credentials/service-account.json"
        credentials_file: "{{ lookup('env','HOME') }}/{{ gcloud_sa_path }}"
        gcloud_service_account: service-account@project-id.iam.gserviceaccount.com
        # use the section below to enter k8s cluster related information
        cluster_name: <name for your k8s cluster>
        initial_node_count: 1
        disk_size_gb: 100
        disk_type: pd-ssd
        machine_type: n1-standard-2

Ansible Roles

After the inventory file, use Ansible roles⁵ to configure the Ansible tasks⁶ to provision our cluster. For this tutorial, I created two roles:

ansible/roles/network — to provision a Virtual Private Cloud (VPC)

ansible/roles/k8s — to provision the Kubernetes cluster

It’s not mandatory to have a separate network for your cluster. I created it because I didn’t want to use the default network.

Below is the YAML configuration file for the network role:

    ---
    - name: create GCP network
      google.cloud.gcp_compute_network:
        name: "network-{{ cluster_name }}"
        auto_create_subnetworks: true
        project: "{{ project_id }}"
        auth_kind: serviceaccount
        service_account_file: "{{ credentials_file }}"
        state: present
      register: network

The task uses the module⁷ google.cloud.gcp_compute_network to create the VPC network. The Jinja2 variables, written between {{ and }}, are replaced by the values entered in the inventory file created earlier.

The register keyword stores the output of this task in the variable network, which is required later during cluster creation.
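To see what the register keyword captured, debug tasks can be added right after the network task. This is a sketch for inspection only and is not part of the original role. The exact fields returned depend on the module version, so only the name field is referenced explicitly:

```yaml
# optional inspection tasks, assumed to be appended to roles/network/tasks/main.yml
- name: show the full output registered by the network task
  ansible.builtin.debug:
    var: network

- name: show only the network name
  ansible.builtin.debug:
    msg: "VPC network name: {{ network.name }}"
```

These tasks change nothing in GCP, so they can be removed once the structure of the registered variable is clear.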

The next role provisions the Kubernetes cluster. It uses two modules: google.cloud.gcp_container_cluster and google.cloud.gcp_container_node_pool.

The former creates the Google Kubernetes Engine cluster, and the latter creates the node pools. A node pool is a set of nodes, i.e., virtual machines, with a common configuration and specification under the control of the cluster master.

Below is the YAML configuration file for the k8s role:

    ---
    - name: create k8s cluster
      google.cloud.gcp_container_cluster:
        name: "{{ cluster_name }}"
        initial_node_count: "{{ initial_node_count }}"
        location: "{{ zone }}"
        network: "{{ network.name }}"
        project: "{{ project_id }}"
        auth_kind: serviceaccount
        service_account_file: "{{ credentials_file }}"
        state: present
      register: cluster

    - name: create k8s node pool
      google.cloud.gcp_container_node_pool:
        name: "node-pool-{{ cluster_name }}"
        initial_node_count: "{{ initial_node_count }}"
        cluster: "{{ cluster }}"
        config:
          disk_size_gb: "{{ disk_size_gb }}"
          disk_type: "{{ disk_type }}"
          machine_type: "{{ machine_type }}"
        location: "{{ zone }}"
        project: "{{ project_id }}"
        auth_kind: serviceaccount
        service_account_file: "{{ credentials_file }}"
        state: present

Note that the value of the network parameter in the create k8s cluster task comes from the output registered in the network role.
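Because the k8s role depends on a variable registered by another role, running it on its own would fail with an undefined-variable error. Below is a minimal sketch of a guard task, assuming it is placed at the top of roles/k8s/tasks/main.yml. The task name and message are illustrative and not from the original code:

```yaml
# hypothetical guard task, not part of the original k8s role
- name: fail early when the network role has not run
  ansible.builtin.assert:
    that:
      - network is defined
      - network.name is defined
    fail_msg: "Variable 'network' is not registered; run the network role before the k8s role."
```

Registered variables are stored per host, which is why a value registered in one role is visible to a later role in the same play.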

Ansible Playbook

The last step is to create an Ansible playbook⁸ to execute both roles. In the ansible folder, I created a file called create-k8s.yml:

    ---
    - name: create infra
      hosts: localhost
      gather_facts: false
      environment:
        GOOGLE_CREDENTIALS: "{{ credentials_file }}"
      roles:
        - network
        - k8s

Now we can provision our Kubernetes cluster with the following command:

    ansible-playbook ansible/create-k8s.yml -i ansible/inventory/<your-inventory-filename>

Output:

    PLAY [create infra] ****************************************************************

    TASK [network : create GCP network] ****************************************************************
    changed: [localhost]

    TASK [k8s : create k8s cluster] ****************************************************************
    changed: [localhost]

    TASK [k8s : create k8s node pool] ****************************************************************
    changed: [localhost]

    PLAY RECAP ****************************************************************
    localhost: ok=3 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
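Since a typo in the inventory only surfaces once a GCP module is called, it can help to validate the variables before any resource is created. The following is a sketch of a variant of create-k8s.yml with a pre_tasks section, which Ansible runs before the roles. The checks and the message are assumptions chosen for illustration, not part of the original playbook:

```yaml
---
- name: create infra
  hosts: localhost
  gather_facts: false
  environment:
    GOOGLE_CREDENTIALS: "{{ credentials_file }}"
  pre_tasks:
    # hypothetical pre-flight check; adjust the list to the variables you rely on
    - name: validate inventory variables before provisioning
      ansible.builtin.assert:
        that:
          - project_id is defined
          - cluster_name is defined
          - zone is defined
          - initial_node_count | int >= 1
        fail_msg: "One or more required inventory variables are missing or invalid."
  roles:
    - network
    - k8s
```

If any condition is false, the play stops before the network role runs, so nothing is created in GCP.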

Connecting to the Kubernetes cluster

Use the gcloud⁹ command-line tool to connect to the Kubernetes cluster:

    gcloud container clusters get-credentials <cluster_name> --zone <zone> --project <project_id>

Note: replace the placeholders with the values used in the inventory file. It is also possible to retrieve this command from the GCP console.

Output:

    Fetching cluster endpoint and auth data.
    kubeconfig entry generated for <cluster_name>.

Using the Kubernetes cluster

After connecting to the cluster, use the kubectl¹⁰ command-line tool or the Google Cloud console to manage it.

    kubectl get nodes
    NAME                                         STATUS   ROLES    AGE   VERSION
    gke-<cluster_name>-node-pool-e058a106-zn2b   Ready    <none>   10m   v1.18.12-gke.1210

Cleaning up

To delete the Kubernetes cluster, create a similar structure with a playbook, roles, and tasks. Basically, the only change required is that the state in each task must be set to absent.

Playbook file created at ansible/destroy-k8s.yml:

    ---
    - name: destroy infra
      hosts: localhost
      gather_facts: false
      environment:
        GOOGLE_CREDENTIALS: "{{ credentials_file }}"
      roles:
        - destroy_k8s
        - destroy_network

Configuring separate roles and tasks for the network and k8s enables us to destroy the Kubernetes cluster and preserve the VPC, if we want to.
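One way to make that choice at run time is to tag each role. The sketch below is a variant of destroy-k8s.yml. The tag names k8s and network are hypothetical and are not used in the original playbook:

```yaml
---
- name: destroy infra
  hosts: localhost
  gather_facts: false
  environment:
    GOOGLE_CREDENTIALS: "{{ credentials_file }}"
  roles:
    # tag names are illustrative; any labels would work
    - role: destroy_k8s
      tags: [k8s]
    - role: destroy_network
      tags: [network]
```

With these tags in place, adding --tags k8s to the ansible-playbook command would remove only the cluster and leave the VPC untouched. Running without --tags still executes both roles.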

Roles and tasks created at ansible/roles:

destroy_network/tasks:

    ---
    - name: destroy GCP network
      google.cloud.gcp_compute_network:
        name: "network-{{ cluster_name }}"
        auto_create_subnetworks: true
        project: "{{ project_id }}"
        auth_kind: serviceaccount
        service_account_file: "{{ credentials_file }}"
        state: absent

destroy_k8s/tasks:

    - name: destroy k8s cluster
      google.cloud.gcp_container_cluster:
        name: "{{ cluster_name }}"
        location: "{{ zone }}"
        project: "{{ project_id }}"
        auth_kind: serviceaccount
        service_account_file: "{{ credentials_file }}"
        state: absent

Execute the playbook to delete both network and k8s resources:

    ansible-playbook ansible/destroy-k8s.yml -i ansible/inventory/<your-inventory-filename>

Output:

    PLAY [destroy infra] *****************************************************************

    TASK [destroy_k8s : destroy k8s cluster] *****************************************************************
    changed: [localhost]

    TASK [destroy_network : destroy GCP network] *****************************************************************
    changed: [localhost]

    PLAY RECAP *****************************************************************
    localhost: ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Conclusion

This tutorial demonstrated how to use Infrastructure as Code to install, set up, and configure a simple Kubernetes cluster on Google Cloud Platform. It also introduced essential Ansible concepts such as roles and playbooks, and showed how to configure and structure them to provision and remove cloud resources.

Much more is possible with Ansible. Use this tutorial as a starting point to manage your Kubernetes configurations, such as namespaces, deployments, and policies, as code. See the Ansible documentation for more information and to expand your Ansible knowledge and usage.
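As one possible starting point, the sketch below creates a namespace with the kubernetes.core.k8s module. It assumes the kubernetes.core collection and the kubernetes Python library are installed, and that a kubeconfig entry for the cluster already exists (for example, from the gcloud command shown earlier). The namespace name demo is a placeholder:

```yaml
# hypothetical task, not part of the roles created in this tutorial
- name: create a namespace in the cluster
  kubernetes.core.k8s:
    state: present
    definition:
      apiVersion: v1
      kind: Namespace
      metadata:
        name: demo
```

Setting state to absent in the same task would remove the namespace, mirroring the pattern used for the GCP resources above.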

Next steps

I have created a follow-up article to demonstrate how to use Ansible to deploy applications in the Kubernetes cluster created here.

You can find the article here.

References

https://docs.microsoft.com/en-us/azure/devops/learn/what-is-infrastructure-as-code

https://docs.ansible.com/ansible/latest/user_guide/intro_inventory.html

https://docs.ansible.com/ansible/latest/user_guide/playbooks_reuse_roles.html

https://docs.ansible.com/ansible/latest/user_guide/basic_concepts.html#tasks

https://docs.ansible.com/ansible/latest/collections/google/cloud/index.html

https://docs.ansible.com/ansible/latest/user_guide/playbooks_intro.html
