Using Terraform to manage multiple GCP resources

- Rafael Natali

- Aug 10, 2023


Updated: Aug 11, 2023

This post was originally published on Medium.

This article presents Terraform code that creates multiple buckets, in multiple locations, with multiple IAM permissions.

I wrote this code to solve the problem described in the Problem Statement section.

The implementation strategy may vary from case to case and is not discussed in depth here.

Problem Statement

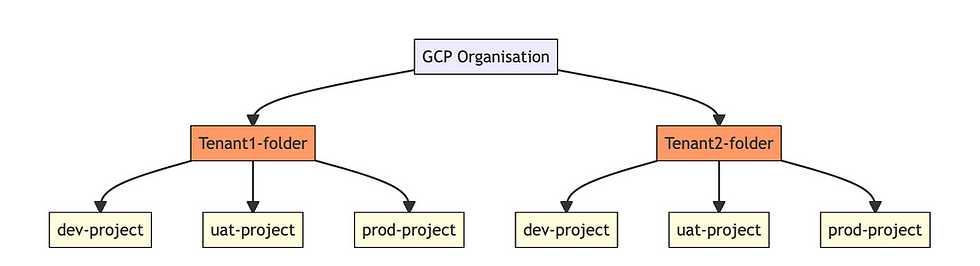

The other day I faced the following challenge: we have a multi-tenant environment on GCP and want to use Infrastructure-as-Code (IaC) to manage the resources created in different projects and environments (dev/uat/prod).

Requirements:

- Both the IT team and the tenants must be able to run the IaC whenever necessary.
- Tenants must not be required to know Terraform or GCP nomenclature.
- Each tenant has its own GitHub repository, from which all of its resources must be managed.
- Use GCP predefined roles.
- Create multiple buckets in multiple locations.
- Tenants can grant permissions to GCP service accounts that belong to other projects; e.g., tenant1 can grant viewer permission to a tenant2 service account.

Architecture example:

Proposed Solution

The solution is a Terraform module that meets these requirements. You can find the full Terraform code in the following GitHub repository. The module was tested using Terraform v1.1.7 with Terraform Google Provider v4.13.0 on macOS Monterey 12.2.1.

```hcl
terraform {
  required_version = "~> 1.1.7"

  required_providers {
    google = {
      source  = "hashicorp/google"
      version = "4.13.0"
    }
  }
}
```

This module makes it easy to create one or more GCS buckets, and assign basic permissions.

The resources this module creates are:

- One or more GCS buckets
- Zero or more IAM bindings for those buckets
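To make the list above concrete, here is a minimal sketch of what the bucket part of cloud-storage-module/main.tf might look like. It is not the repository's code: the fixed values (force_destroy, uniform_bucket_level_access) are taken from the plan output shown later in this post, the variable names follow the module call, the IAM variables are omitted, and the shape of lifecycle_policy is an assumption.

```hcl
# Hypothetical sketch of the bucket part of cloud-storage-module/main.tf.
# IAM-related variables are omitted; lifecycle_policy's shape is assumed.
variable "name" { type = string }
variable "project" { type = string }
variable "location" { type = string }
variable "storage_class" { type = string }
variable "versioning_enabled" { type = bool }

variable "lifecycle_policy" {
  # Assumed shape: [{ action = { type = "Delete" }, condition = { age = 1 } }]
  type    = any
  default = []
}

resource "google_storage_bucket" "bucket" {
  name                        = var.name
  project                     = var.project
  location                    = var.location
  storage_class               = var.storage_class
  force_destroy               = true
  uniform_bucket_level_access = true

  versioning {
    enabled = var.versioning_enabled
  }

  # One lifecycle_rule block per list entry; an empty list adds none.
  dynamic "lifecycle_rule" {
    for_each = var.lifecycle_policy
    content {
      action {
        type = lifecycle_rule.value.action.type
      }
      condition {
        age = lifecycle_rule.value.condition.age
      }
    }
  }
}
```

Note that force_destroy = true lets Terraform delete a bucket even when it still contains objects, which is convenient for short-lived dev buckets but worth reconsidering for prod.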

This module only assigns permissions to GCP service accounts. The same logic can be followed to extend it to groups and users.
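Following the same pattern, a hypothetical extension to groups and users could look like the sketch below. The groups and users keys, the example.com domain, and the assumption that every user shares that one domain are illustrative only; group: and user: are the standard IAM member prefixes. The configuration needs no provider, so terraform init followed by terraform apply prints the expanded members.

```hcl
locals {
  # Hypothetical inputs: short names as a tenant might enter them.
  domain = "example.com"
  internal_tenant_roles_viewer = {
    objectViewer = {
      groups = ["data-readers"]
      users  = ["alice"]
    }
  }

  # Same for-expression pattern as the service-account version, but
  # emitting group: and user: members. try() falls back to an empty list
  # when the tenant omits a key. Assumes all users share local.domain.
  internal_members_fully_qualified_viewer = {
    for tenant_role, entities in local.internal_tenant_roles_viewer :
    tenant_role => {
      groups = [for g in try(entities.groups, []) : "group:${g}@${local.domain}"]
      users  = [for u in try(entities.users, []) : "user:${u}@${local.domain}"]
    }
  }
}

output "viewer_members" {
  value = local.internal_members_fully_qualified_viewer
}
```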

How it works

For example purposes, the Terraform module was created in the sub-folder cloud-storage-module. In a real scenario, you can create the module in a separate GitHub repository and call it from wherever necessary.

The module receives the variables defined in the local terraform.tfvars.json file, then creates the buckets and assigns the roles as defined there.

Refer to the module documentation for a detailed explanation of the module.

This module does not create roles. It uses the GCP predefined roles that already exist.
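As an illustration of how a short role name such as objectAdmin can be mapped onto the predefined role roles/storage.objectAdmin, here is a hypothetical sketch. The resource name and the member-string instance keys mirror the plan output later in this post; bucket_name and admin_roles_fully_qualified are assumed names, and the default value is only an example.

```hcl
# Hypothetical inputs; the real module's variable names may differ.
variable "bucket_name" {
  type = string
}

variable "admin_roles_fully_qualified" {
  # Role short name => { service_accounts = [fully qualified members] }
  type = map(object({ service_accounts = list(string) }))
  default = {
    objectAdmin = {
      service_accounts = [
        "serviceAccount:platform-infra@tenant1-dev.iam.gserviceaccount.com",
      ]
    }
  }
}

locals {
  # member => predefined role, e.g. "serviceAccount:..." => "roles/storage.objectAdmin".
  # merge() keeps the last role if one member appears under two roles.
  admin_bindings = merge([
    for role, cfg in var.admin_roles_fully_qualified : {
      for member in cfg.service_accounts : member => "roles/storage.${role}"
    }
  ]...)
}

# One binding per member, keyed by the member string as in the plan output.
resource "google_storage_bucket_iam_member" "admin-member-bucket-internal" {
  for_each = local.admin_bindings

  bucket = var.bucket_name
  role   = each.value
  member = each.key
}
```

Keying instances by the member string keeps resource addresses readable, at the cost that the same member cannot hold two roles from one map; keying by a "role/member" string would lift that restriction but change the instance addresses.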

Usage

Basic usage of this module is as follows and can be found in the main.tf file.

terraform.tfvars.json

This is the variables file used to create the resources. This section describes how to declare the buckets.

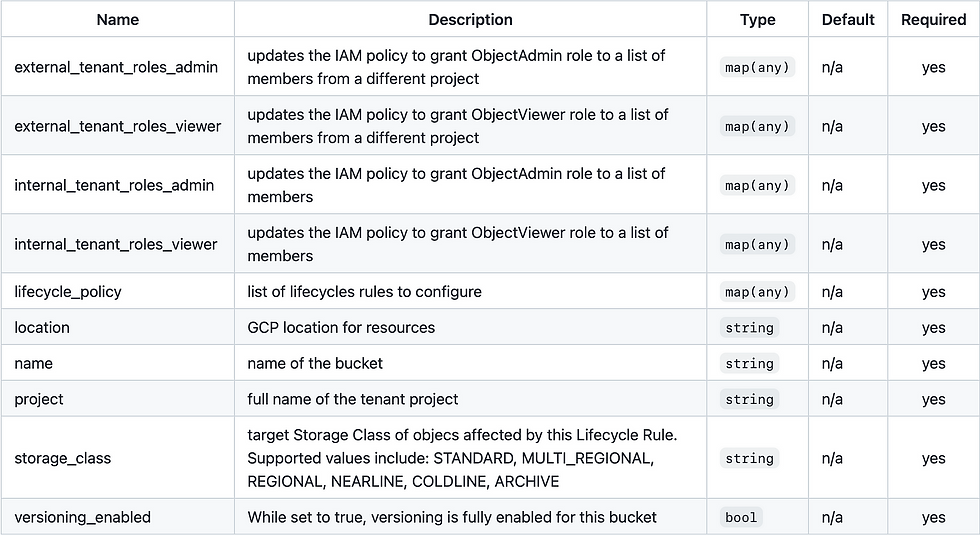
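Since the example file above is only available as an image, here is a hypothetical equivalent in Terraform's native tfvars syntax. The project, location, storage class, versioning, lifecycle and role values match the plan output and the role snippets later in this post; the bucket name and the lifecycle_rule shape are assumptions, and the external project is written as tenant2-dev to match the plan output.

```hcl
# Hypothetical terraform.tfvars mirroring terraform.tfvars.json.
project = "tenant1-dev"

gcs_buckets = {
  eu = {
    name               = "tenant1-data" # illustrative name
    storage_class      = "STANDARD"
    versioning_enabled = true
    lifecycle_rule = [
      { action = { type = "Delete" }, condition = { age = 1 } }
    ]
    internal_tenant_roles_admin = {
      objectAdmin = { service_accounts = ["platform-infra", "platform-ko"] }
    }
    internal_tenant_roles_viewer = {
      objectViewer = { service_accounts = ["viewer-infra", "viewer-ko"] }
    }
    external_tenant_roles_admin = {
      objectAdmin = [
        { project = "tenant2-dev", service_accounts = ["platform-infra", "platform-ko"] }
      ]
    }
    external_tenant_roles_viewer = {
      objectViewer = [
        { project = "tenant2-dev", service_accounts = ["viewer-infra", "viewer-ko"] }
      ]
    }
  }
}
```

Terraform auto-loads both terraform.tfvars and terraform.tfvars.json; if both exist, values from the JSON file take precedence, so keep only one of them.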

The table below describes the input variables for the module.

Explaining the gcs_bucket module

This section walks through the gcs_bucket module call line by line.

The label immediately after the module keyword is a local name. The source argument is mandatory for all modules; here it means we are using the Terraform code in the cloud-storage-module folder. The for_each meta-argument accepts a map (or a set of strings) and creates an instance for each item. Each instance has a distinct infrastructure object associated with it, and each is separately created, updated, or destroyed when the configuration is applied. In practice, for_each allows us to create 1 to N buckets.
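The module call below reads var.gcs_buckets and var.project, and falls back to var.storage_class and var.versioning_enabled. A hypothetical root-module variables.tf declaring them might look like this; the names come from the module call, while the types, descriptions and default values are assumptions.

```hcl
# Hypothetical root-module variables.tf.
variable "project" {
  description = "GCP project that owns the buckets, e.g. tenant1-dev."
  type        = string
}

variable "gcs_buckets" {
  # Keyed by location (e.g. "eu"); for_each creates one module instance
  # per key. "any" accepts the nested per-bucket objects as they are.
  description = "Per-location bucket settings read from terraform.tfvars.json."
  type        = any
}

variable "storage_class" {
  description = "Fallback used when a bucket entry leaves storage_class empty."
  type        = string
  default     = "STANDARD"
}

variable "versioning_enabled" {
  description = "Fallback used when a bucket entry leaves versioning_enabled empty."
  type        = bool
  default     = true
}
```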

```hcl
module "gcs_bucket" {
  for_each = var.gcs_buckets
  source   = "./cloud-storage-module"
```

location is the key of the gcs_buckets variable in terraform.tfvars.json.

```hcl
  location = each.key
```

project is the equivalent entry in terraform.tfvars.json.

```hcl
  project = var.project
```

name combines the name entry of the gcs_buckets variable in terraform.tfvars.json with the location and a random ID, which makes the bucket name unique.
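The name expression references random_id.bucket.hex. Judging from the plan output later in this post (byte_length = 8 and a keepers entry bucket_id = "tenant1-dev"), the resource is probably declared along these lines; using var.project as the keeper value is an assumption.

```hcl
# Reconstructed from the plan output; uses the hashicorp/random provider.
# A new suffix is generated only when a keepers value changes.
resource "random_id" "bucket" {
  byte_length = 8

  keepers = {
    bucket_id = var.project
  }
}
```

Because there is a single random_id (it has no for_each), every bucket shares the same suffix and the location in the name keeps them distinct. The hex value is always twice the byte length, so these 16 characters count toward the 63-character limit that applies to bucket names without dots.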

```hcl
  name = "${each.value.name}-${each.key}-${random_id.bucket.hex}"
```

Both storage_class and versioning_enabled use a simple conditional expression: if a value is specified in terraform.tfvars.json, use it; otherwise, use the default value defined in the variables.tf file.

```hcl
  storage_class      = each.value.storage_class != "" ? each.value.storage_class : var.storage_class
  versioning_enabled = each.value.versioning_enabled != "" ? each.value.versioning_enabled : var.versioning_enabled
```

When a lifecycle rule is defined in terraform.tfvars.json, a lifecycle management configuration is added to the bucket.

```hcl
  lifecycle_policy = each.value.lifecycle_rule
```

The internal_tenant_roles and external_tenant_roles variables define the IAM policy of the Cloud Storage bucket for service accounts within and outside the tenant's project, respectively.

```hcl
  internal_tenant_roles_admin  = each.value.internal_tenant_roles_admin
  internal_tenant_roles_viewer = each.value.internal_tenant_roles_viewer
  external_tenant_roles_admin  = each.value.external_tenant_roles_admin
  external_tenant_roles_viewer = each.value.external_tenant_roles_viewer
}
```

Explaining the management of IAM policies

The management of IAM policies deserves its own section because the Terraform configuration is not straightforward. An important requirement for the automation is to keep it as simple as possible for the tenants and reusable across environments. Therefore, from the tenant's point of view, all the configuration needed is:

Internal roles:

```json
"internal_tenant_roles_admin": {
  "objectAdmin": {
    "service_accounts": ["platform-infra", "platform-ko"]
  }
},
"internal_tenant_roles_viewer": {
  "objectViewer": {
    "service_accounts": ["viewer-infra", "viewer-ko"]
  }
}
```

External roles:

```json
"external_tenant_roles_admin": {
  "objectAdmin": [
    {
      "project": "tenant2",
      "service_accounts": ["platform-infra", "platform-ko"]
    }
  ]
},
"external_tenant_roles_viewer": {
  "objectViewer": [
    {
      "project": "tenant2",
      "service_accounts": ["viewer-infra", "viewer-ko"]
    }
  ]
}
```

The tenant only provides the role and a short name for each service account. The automation then has to expand each short name into the fully qualified GCP member name, for example:

serviceAccount:platform-infra@tenant1-dev.iam.gserviceaccount.com

This transformation happens in the locals block of the main.tf file. The logic is similar for internal and external resources.

For each role in the respective variable, a for expression builds a new object named <internal/external>_roles_fully_qualified_<admin/viewer>. Inside it, a nested for iterates over the service_accounts entry. The coalesce function returns the first of its arguments that is not null, so a null service_accounts value falls back to an empty list. Each short name (v) entered by the tenant is then expanded into a fully qualified member using the correct project.

```hcl
internal_roles_fully_qualified_admin = {
  for tenant_role, entities in var.internal_tenant_roles_admin :
  tenant_role => {
    service_accounts = [for k, v in coalesce(entities["service_accounts"], []) : "serviceAccount:${v}@${var.project}.iam.gserviceaccount.com"]
  }
}
```

For the external roles, flatten is also needed to fold in the project entries: each role holds a list of entries (one per project), the inner for produces a separate list of members for each entry, and flatten merges them into a single list.

```hcl
external_roles_fully_qualified_admin = {
  for tenant_role, entries in var.external_tenant_roles_admin :
  tenant_role => {
    service_accounts = flatten([
      for entry in entries : [
        for k, v in coalesce(entry["service_accounts"], []) :
        "serviceAccount:${v}@${entry["project"]}.iam.gserviceaccount.com"
      ]
    ])
  }
}
```
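To check what these expressions produce without touching GCP, they can be wrapped in a standalone configuration. The sketch below is a hypothetical test harness, not part of the repository: the inputs become typed variables with defaults copied from the tenant snippets above (with the external project written as tenant2-dev to match the plan output), and the unused index k is dropped from the for expressions.

```hcl
variable "project" {
  type    = string
  default = "tenant1-dev"
}

variable "internal_tenant_roles_admin" {
  type = map(object({ service_accounts = list(string) }))
  default = {
    objectAdmin = { service_accounts = ["platform-infra", "platform-ko"] }
  }
}

variable "external_tenant_roles_admin" {
  type = map(list(object({ project = string, service_accounts = list(string) })))
  default = {
    objectAdmin = [
      { project = "tenant2-dev", service_accounts = ["platform-infra", "platform-ko"] }
    ]
  }
}

locals {
  # Internal: every short name is qualified with the tenant's own project.
  internal_roles_fully_qualified_admin = {
    for tenant_role, entities in var.internal_tenant_roles_admin :
    tenant_role => {
      service_accounts = [
        for v in coalesce(entities["service_accounts"], []) :
        "serviceAccount:${v}@${var.project}.iam.gserviceaccount.com"
      ]
    }
  }

  # External: one inner list per project entry, merged by flatten().
  external_roles_fully_qualified_admin = {
    for tenant_role, entries in var.external_tenant_roles_admin :
    tenant_role => {
      service_accounts = flatten([
        for entry in entries : [
          for v in coalesce(entry["service_accounts"], []) :
          "serviceAccount:${v}@${entry["project"]}.iam.gserviceaccount.com"
        ]
      ])
    }
  }
}

output "internal_admin" {
  value = local.internal_roles_fully_qualified_admin
}

output "external_admin" {
  value = local.external_roles_fully_qualified_admin
}
```

After terraform init and terraform apply (no provider is required), internal_admin lists serviceAccount:platform-infra@tenant1-dev.iam.gserviceaccount.com and serviceAccount:platform-ko@tenant1-dev.iam.gserviceaccount.com under objectAdmin, and external_admin lists the same two accounts qualified with tenant2-dev.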

Terraform Plan output

This is the terraform plan output produced with the example terraform.tfvars.json file from the GitHub repository.

```
Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # random_id.bucket will be created
  + resource "random_id" "bucket" {
      + b64_std     = (known after apply)
      + b64_url     = (known after apply)
      + byte_length = 8
      + dec         = (known after apply)
      + hex         = (known after apply)
      + id          = (known after apply)
      + keepers     = {
          + "bucket_id" = "tenant1-dev"
        }
    }

  # module.gcs_bucket["eu"].google_storage_bucket.bucket will be created
  + resource "google_storage_bucket" "bucket" {
      + force_destroy               = true
      + id                          = (known after apply)
      + location                    = "EU"
      + name                        = (known after apply)
      + project                     = "tenant1-dev"
      + requester_pays              = false
      + self_link                   = (known after apply)
      + storage_class               = "STANDARD"
      + uniform_bucket_level_access = true
      + url                         = (known after apply)

      + lifecycle_rule {
          + action {
              + type = "Delete"
            }

          + condition {
              + age                   = 1
              + matches_storage_class = []
              + with_state            = (known after apply)
            }
        }

      + versioning {
          + enabled = true
        }
    }

  # module.gcs_bucket["eu"].google_storage_bucket_iam_member.admin-member-bucket-external["serviceAccount:platform-infra@tenant2-dev.iam.gserviceaccount.com"] will be created
  + resource "google_storage_bucket_iam_member" "admin-member-bucket-external" {
      + bucket = (known after apply)
      + etag   = (known after apply)
      + id     = (known after apply)
      + member = "serviceAccount:platform-infra@tenant2-dev.iam.gserviceaccount.com"
      + role   = "roles/storage.objectAdmin"
    }

  # module.gcs_bucket["eu"].google_storage_bucket_iam_member.admin-member-bucket-external["serviceAccount:platform-ko@tenant2-dev.iam.gserviceaccount.com"] will be created
  + resource "google_storage_bucket_iam_member" "admin-member-bucket-external" {
      + bucket = (known after apply)
      + etag   = (known after apply)
      + id     = (known after apply)
      + member = "serviceAccount:platform-ko@tenant2-dev.iam.gserviceaccount.com"
      + role   = "roles/storage.objectAdmin"
    }

  # module.gcs_bucket["eu"].google_storage_bucket_iam_member.admin-member-bucket-internal["serviceAccount:platform-infra@tenant1-dev.iam.gserviceaccount.com"] will be created
  + resource "google_storage_bucket_iam_member" "admin-member-bucket-internal" {
      + bucket = (known after apply)
      + etag   = (known after apply)
      + id     = (known after apply)
      + member = "serviceAccount:platform-infra@tenant1-dev.iam.gserviceaccount.com"
      + role   = "roles/storage.objectAdmin"
    }

  # module.gcs_bucket["eu"].google_storage_bucket_iam_member.admin-member-bucket-internal["serviceAccount:platform-ko@tenant1-dev.iam.gserviceaccount.com"] will be created
  + resource "google_storage_bucket_iam_member" "admin-member-bucket-internal" {
      + bucket = (known after apply)
      + etag   = (known after apply)
      + id     = (known after apply)
      + member = "serviceAccount:platform-ko@tenant1-dev.iam.gserviceaccount.com"
      + role   = "roles/storage.objectAdmin"
    }

  # module.gcs_bucket["eu"].google_storage_bucket_iam_member.viewer-member-bucket-external["serviceAccount:viewer-infra@tenant2-dev.iam.gserviceaccount.com"] will be created
  + resource "google_storage_bucket_iam_member" "viewer-member-bucket-external" {
      + bucket = (known after apply)
      + etag   = (known after apply)
      + id     = (known after apply)
      + member = "serviceAccount:viewer-infra@tenant2-dev.iam.gserviceaccount.com"
      + role   = "roles/storage.objectViewer"
    }

  # module.gcs_bucket["eu"].google_storage_bucket_iam_member.viewer-member-bucket-external["serviceAccount:viewer-ko@tenant2-dev.iam.gserviceaccount.com"] will be created
  + resource "google_storage_bucket_iam_member" "viewer-member-bucket-external" {
      + bucket = (known after apply)
      + etag   = (known after apply)
      + id     = (known after apply)
      + member = "serviceAccount:viewer-ko@tenant2-dev.iam.gserviceaccount.com"
      + role   = "roles/storage.objectViewer"
    }

  # module.gcs_bucket["eu"].google_storage_bucket_iam_member.viewer-member-bucket-internal["serviceAccount:viewer-infra@tenant1-dev.iam.gserviceaccount.com"] will be created
  + resource "google_storage_bucket_iam_member" "viewer-member-bucket-internal" {
      + bucket = (known after apply)
      + etag   = (known after apply)
      + id     = (known after apply)
      + member = "serviceAccount:viewer-infra@tenant1-dev.iam.gserviceaccount.com"
      + role   = "roles/storage.objectViewer"
    }

  # module.gcs_bucket["eu"].google_storage_bucket_iam_member.viewer-member-bucket-internal["serviceAccount:viewer-ko@tenant1-dev.iam.gserviceaccount.com"] will be created
  + resource "google_storage_bucket_iam_member" "viewer-member-bucket-internal" {
      + bucket = (known after apply)
      + etag   = (known after apply)
      + id     = (known after apply)
      + member = "serviceAccount:viewer-ko@tenant1-dev.iam.gserviceaccount.com"
      + role   = "roles/storage.objectViewer"
    }

Plan: 10 to add, 0 to change, 0 to destroy.
```

As the output shows, one bucket will be created in the EU multi-region location of the tenant1-dev project, and the IAM permissions will be assigned as follows:

- objectAdmin to the platform-infra and platform-ko service accounts from both the tenant1-dev and tenant2-dev projects.
- objectViewer to the viewer-infra and viewer-ko service accounts from both the tenant1-dev and tenant2-dev projects.
